[k8s][vSphere with Tanzu] spec.loadBalancerClass: what it is and how it can be useful

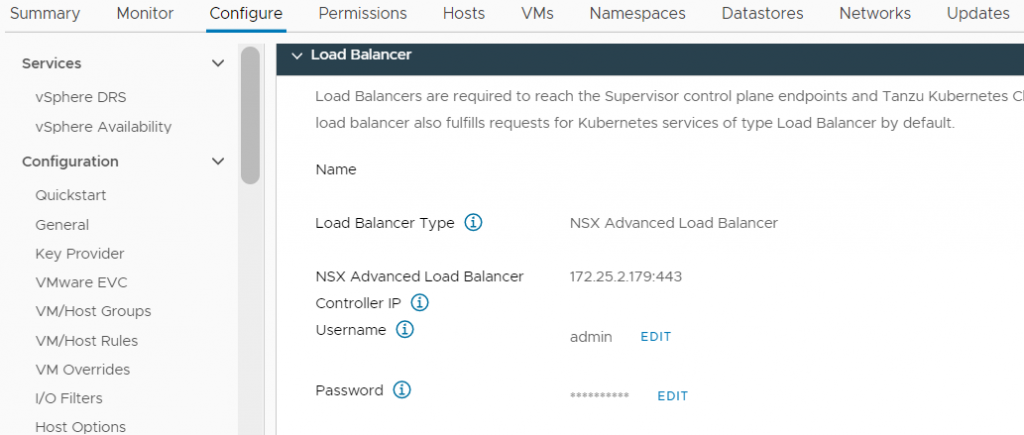

When we deploy our vSphere with Tanzu environment, one of the steps to enable Workload Management is to specify our load balancer.

This can be the standard NSX load balancer, the NSX Advanced Load Balancer (AVI) or HAProxy. Whichever we choose, every cluster we create from vSphere with Tanzu will expose its services through it, regardless of the namespace the cluster is in, transparently to the cluster and without the need to install any add-on.

But what if we want to use an on-demand load balancer in our cluster? Let’s suppose that for whatever reason we want to expose certain services through a different load balancer than the one we used to configure Workload Management, for example a different instance of NSX ALB (AVI) connected to different networks in an isolated environment, or a load balancer from a third party.

In that case, we install the controller for our load balancer inside the cluster, but a surprise awaits when the time comes to expose a service: the default load balancer keeps working alongside the newly installed controller, so the service is exposed on both at the same time and ends up duplicated, as I show in the following example:

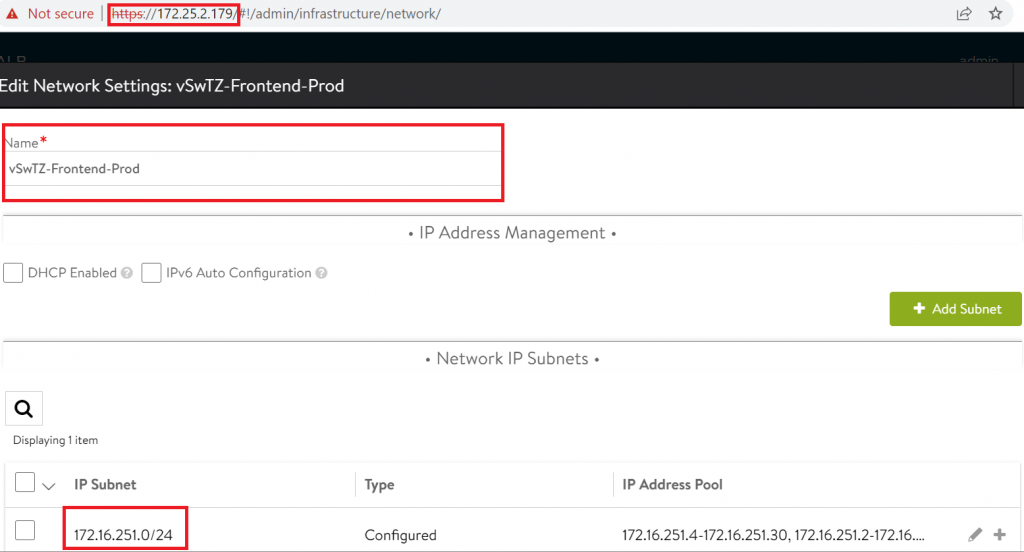

As you can see in the screenshot above, the LB configured for my vSphere with Tanzu (vWTZ) cloud is at IP 172.25.2.179, and it is configured to expose services over the prod network (172.25.251.0/24).

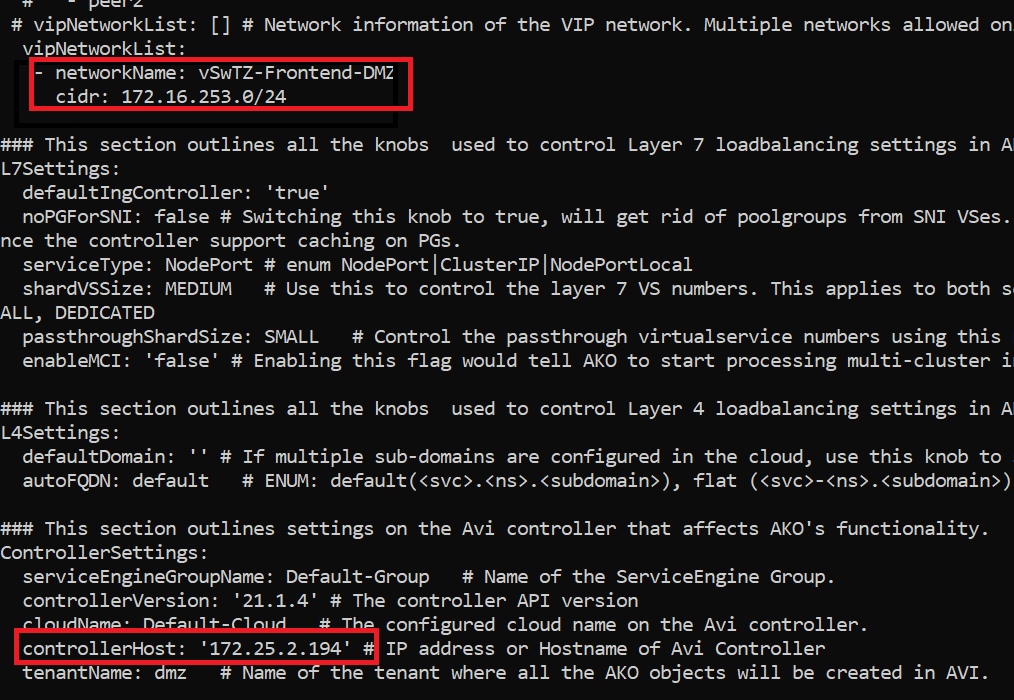

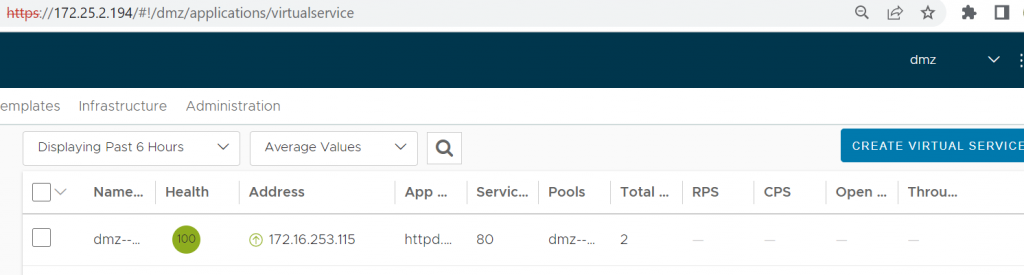

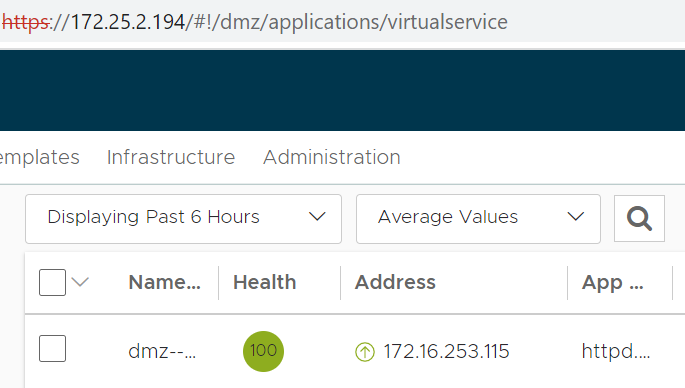

In my cluster, meanwhile, I have deployed AKO and connected it to a different AVI instance at IP 172.25.2.194. This instance publishes to the frontend network of a DMZ environment, which is separate from the production environment configured in the first one.

But what happens when we try to expose a service?

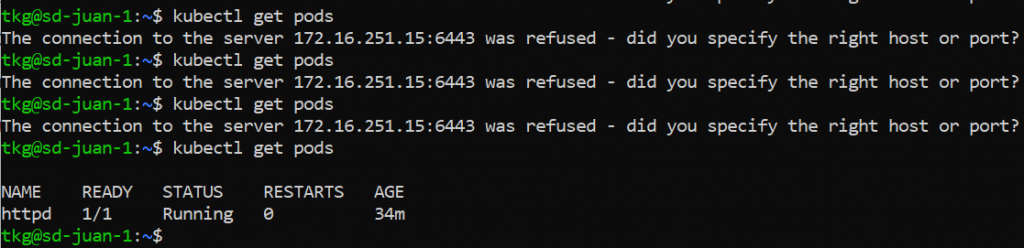

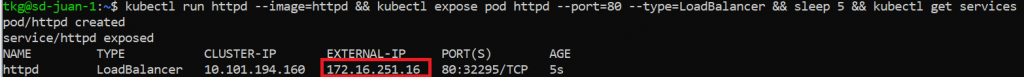

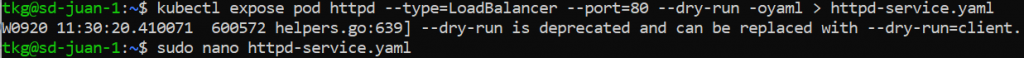

Let’s create an Apache pod and expose it:

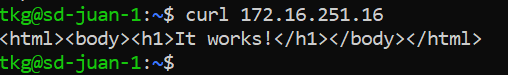
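A rough declarative equivalent of this step might look like the sketch below. The name `apache`, the `app: apache` label and the `httpd:2.4` image are placeholders of mine, not values taken from the screenshots, so adjust them to your environment. The important detail is that the Service does not set any `loadBalancerClass`, which is why every load balancer controller watching the cluster may pick it up.

```yaml
# Hypothetical Pod running Apache (names and image are illustrative)
apiVersion: v1
kind: Pod
metadata:
  name: apache
  labels:
    app: apache
spec:
  containers:
  - name: apache
    image: httpd:2.4
    ports:
    - containerPort: 80
---
# Plain LoadBalancer Service with no loadBalancerClass set
apiVersion: v1
kind: Service
metadata:
  name: apache
spec:
  type: LoadBalancer
  selector:
    app: apache
  ports:
  - port: 80
    targetPort: 80
```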

As you can see, the pod appears to have been exposed by the LB provided by the cloud instead of the one installed on-premises. But is this really the case? Let’s review:

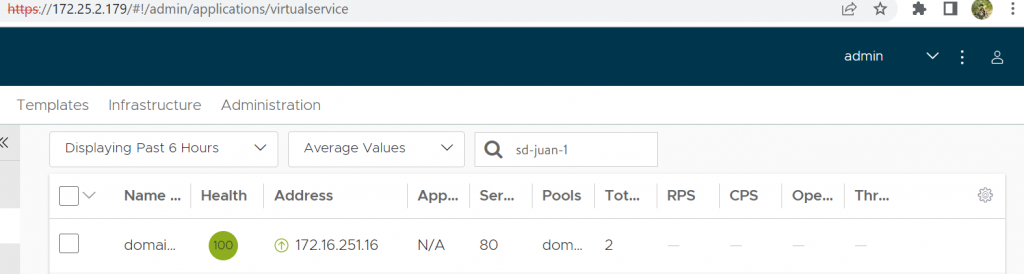

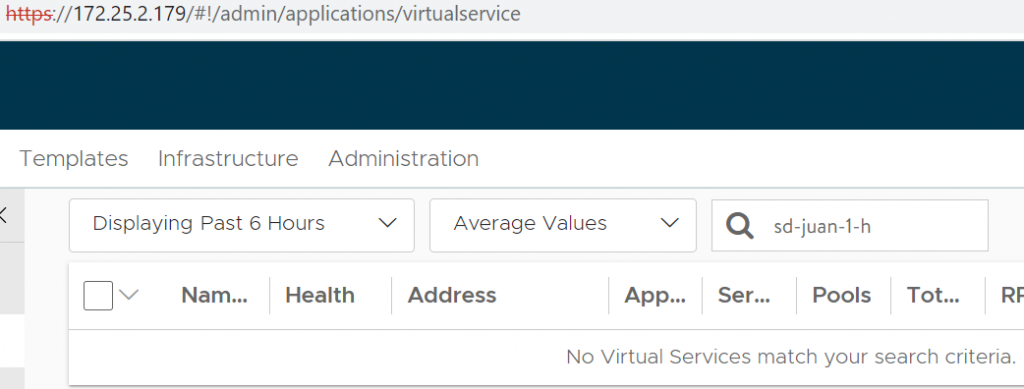

But what about our second load balancer instance? It has picked up the service as well, so the service is now exposed twice.

To avoid this problem, I dug into the kubernetes.io documentation and came across the `spec.loadBalancerClass` field, which seemed to address exactly my case and looked like a possible solution:

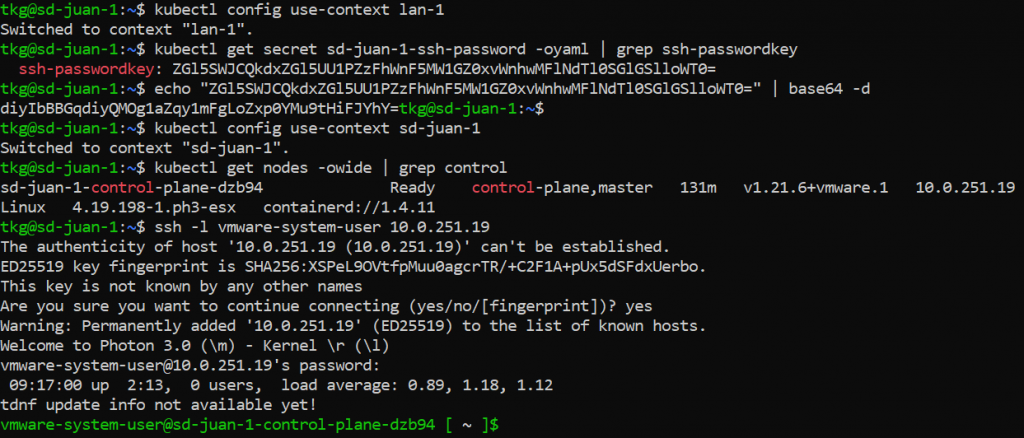

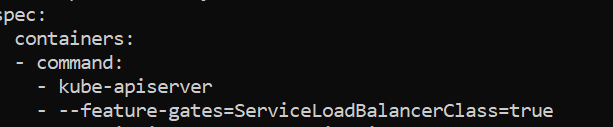

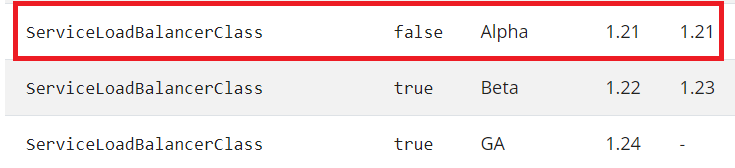

However, for this feature to work, the “ServiceLoadBalancerClass” feature gate must be enabled in our kube-apiserver. The feature gate was introduced in Kubernetes 1.21, where it is alpha and therefore disabled by default. From Kubernetes 1.22 onwards, it is enabled by default.

In my case, as my cluster runs Kubernetes 1.21, I need to enable the feature gate to be able to use the feature (this is not officially supported by Tanzu, so it should not be done in production environments).

If your cluster runs Kubernetes 1.22 or later, you can skip this section.

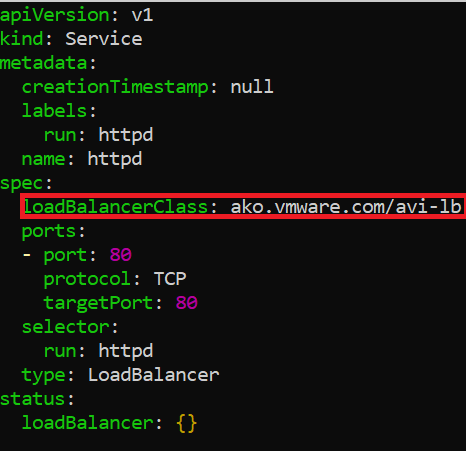
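As a minimal sketch of what this change involves, assuming a kubeadm-style control plane where kube-apiserver runs as a static pod, the flag can be added to the API server manifest. The file path in the comment is that assumption, not something confirmed for every Tanzu release. If your manifest already contains a `--feature-gates` flag, append the gate to the existing comma-separated list instead of adding a second flag.

```yaml
# Fragment of /etc/kubernetes/manifests/kube-apiserver.yaml
# (path assumed from kubeadm conventions; verify it on your control plane nodes)
spec:
  containers:
  - name: kube-apiserver
    command:
    - kube-apiserver
    # ...existing flags stay unchanged...
    - --feature-gates=ServiceLoadBalancerClass=true
```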

Let’s test the feature by exposing the same service as before, but this time with a `loadBalancerClass`. As I am using AVI, my class is “ako.vmware.com/avi-lb”.
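In manifest form, the Service could look roughly like this. The Service name and the selector are hypothetical and must match the labels on your own Apache pod; only the class value comes from my AKO setup.

```yaml
# LoadBalancer Service pinned to the AKO-managed AVI instance
apiVersion: v1
kind: Service
metadata:
  name: apache-dmz            # illustrative name
spec:
  type: LoadBalancer
  loadBalancerClass: ako.vmware.com/avi-lb
  selector:
    app: apache               # assumed pod label
  ports:
  - port: 80
    targetPort: 80
```

Keep in mind that `spec.loadBalancerClass` can only be set on Services of type LoadBalancer and cannot be changed once set, so an existing Service has to be deleted and recreated to move it to another class.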

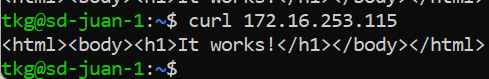

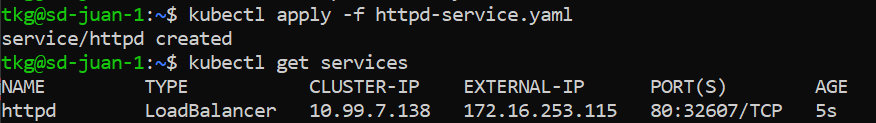

As you can see, this time the service has been exposed only by the on-premises LB and has not been duplicated on the LB provided by the cloud. With this feature, we can choose which LB each service is exposed through.

As always, I hope you found it useful and enjoyed it as much as I did, so don’t hesitate to comment on any suggestions or questions!