[NSX ALB][vSphere with Tanzu] Using markers to choose a VIP network from the IPAM


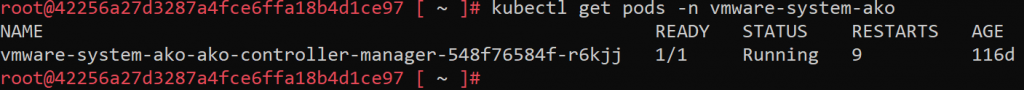

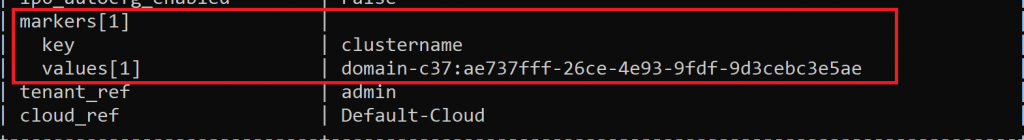

When we deploy vSphere with Tanzu with the NSX Advanced Load Balancer (ALB) as the load balancer, we can choose our Workload networks but not our Frontend networks. Frontend (VIP) addresses are assigned directly from the IPAM profile we have configured in ALB.

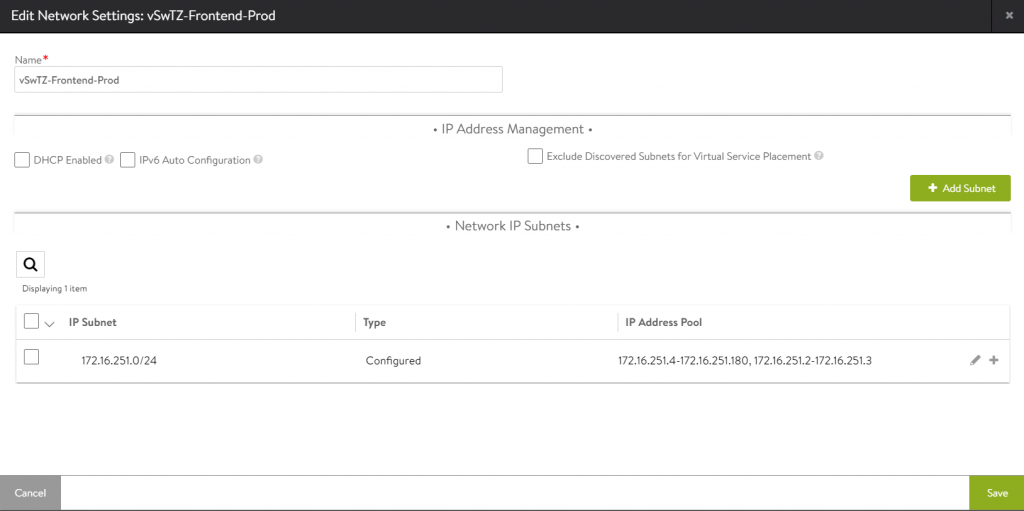

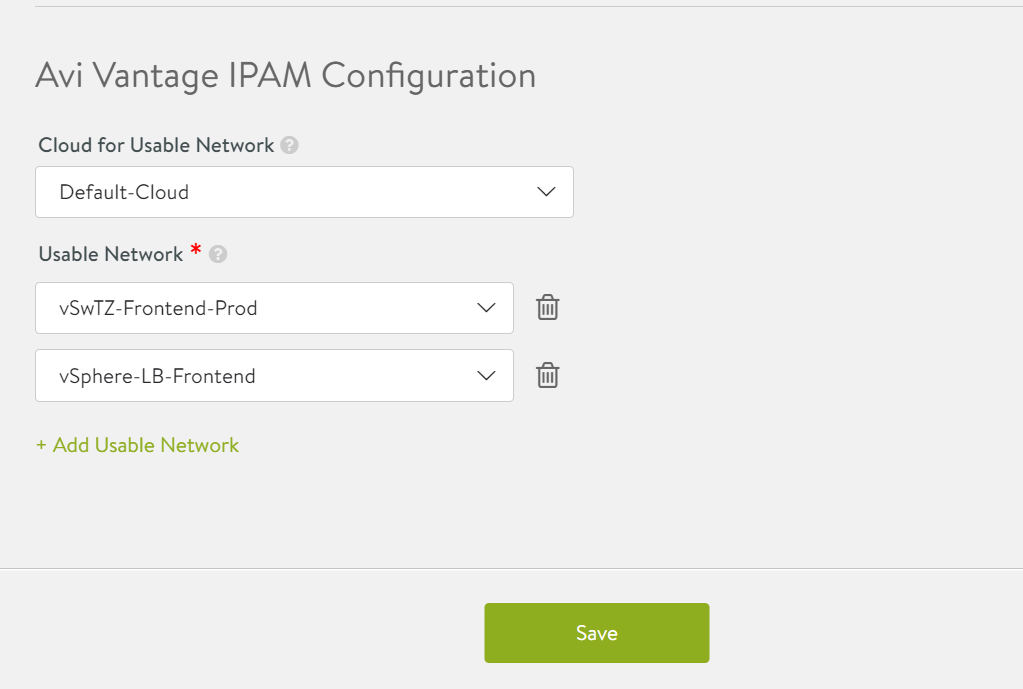

But what if we use ALB for more than one purpose and our IPAM profile contains several networks? For example, we might have one network for Tanzu and another for load balancing conventional VM services:

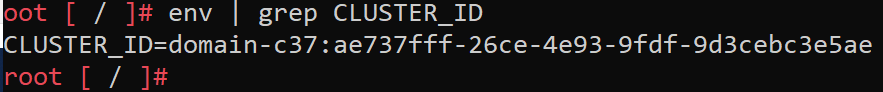

In standalone AKO deployments we can use the vipNetworkList field to choose which network will be our frontend network. However, this option is not available when we deploy vSphere with Tanzu with NSX ALB as the load balancer. As a result, when we deploy API servers and publish services from our clusters, VIPs are allocated in round-robin fashion across all the networks configured in our IPAM profile, and we have no control over which network each IP comes from.

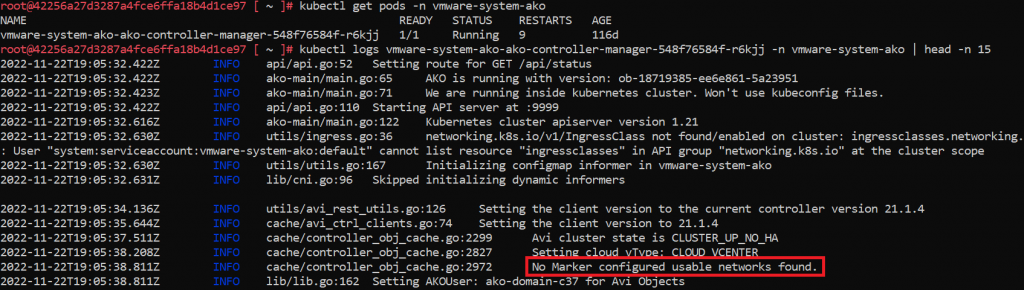

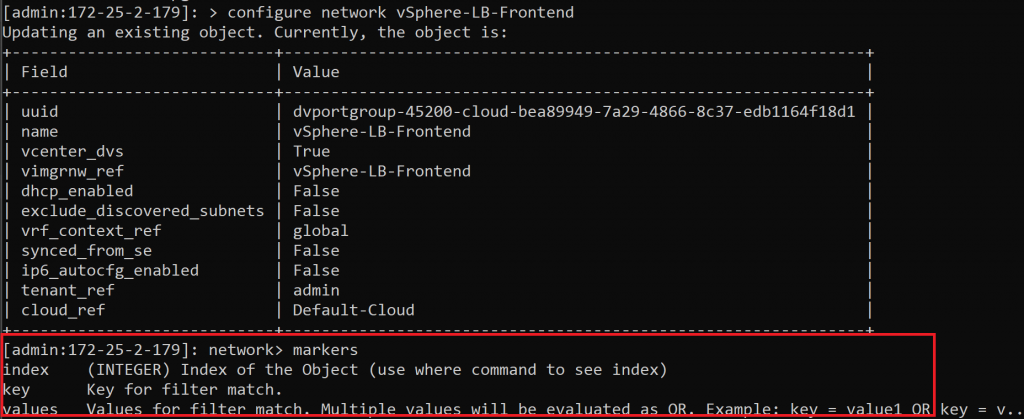

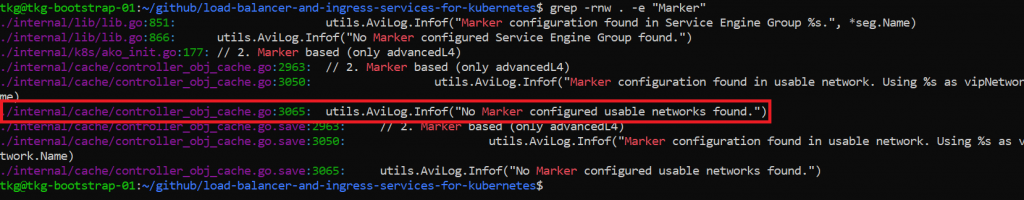

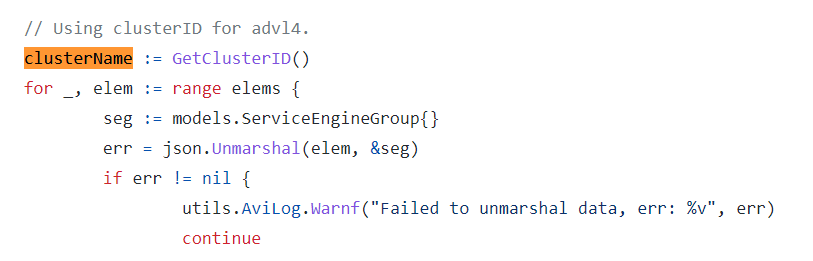

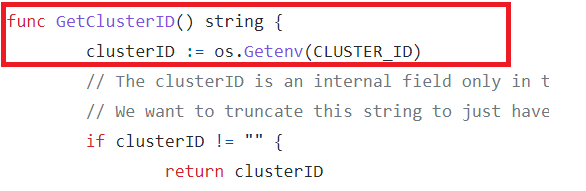

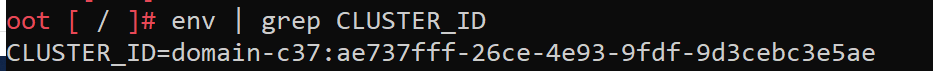

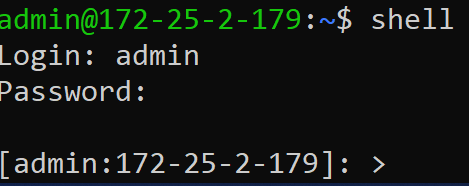

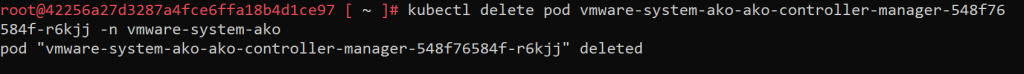

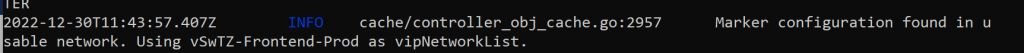
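To make the allocation behavior described above concrete, here is a toy Python model. It is not Avi's actual IPAM code: it simply assumes a plain round-robin across the eligible networks, which matches what the screenshots show, and the network names and CIDRs (`tanzu-frontend`, `vm-vip`, `192.168.x.0/24`) are hypothetical. The optional `vip_network_list` argument only loosely mirrors what AKO's vipNetworkList achieves in standalone deployments, namely restricting allocation to the listed networks.

```python
from ipaddress import IPv4Address, IPv4Network
from itertools import cycle
from typing import Dict, List, Optional, Tuple


def allocate_vips(
    ipam_networks: Dict[str, str],
    services: List[str],
    vip_network_list: Optional[List[str]] = None,
) -> List[Tuple[str, str, IPv4Address]]:
    """Toy model: hand out one VIP per service, rotating across eligible networks.

    ipam_networks maps a (hypothetical) network name to its CIDR.
    vip_network_list, when given, restricts allocation to those network names;
    this is only an analogy for AKO's vipNetworkList, not its implementation.
    """
    eligible = [
        name for name in ipam_networks
        if vip_network_list is None or name in vip_network_list
    ]
    if not eligible:
        raise ValueError("no IPAM network matches vip_network_list")

    # One host iterator per network, so each allocation takes the next free address.
    pools = {name: IPv4Network(ipam_networks[name]).hosts() for name in eligible}
    rotation = cycle(eligible)

    allocations = []
    for service in services:
        network = next(rotation)  # round-robin: the network choice is not ours
        allocations.append((service, network, next(pools[network])))
    return allocations


if __name__ == "__main__":
    # Hypothetical IPAM profile with one Tanzu network and one VM network.
    ipam = {"tanzu-frontend": "192.168.10.0/24", "vm-vip": "192.168.20.0/24"}
    services = ["supervisor-api", "tkc-api", "web-lb"]

    # Without a VIP network list, VIPs alternate between both networks.
    for row in allocate_vips(ipam, services):
        print(*row)

    # With a VIP network list, every VIP lands in the Tanzu network.
    for row in allocate_vips(ipam, services, vip_network_list=["tanzu-frontend"]):
        print(*row)
```

In the first loop, `tkc-api` ends up in the VM network even though it belongs to Tanzu, which is exactly the problem this post is about. In the second loop, every VIP is pinned to the Tanzu network.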

Recently I came across a customer with exactly this problem, and I was not able to solve it at first. After reviewing the VMware and Avi Networks documentation without finding an answer, I decided to dig into the AKO source code on GitHub and look for the answer myself:

As always, I hope you liked it. See you soon!